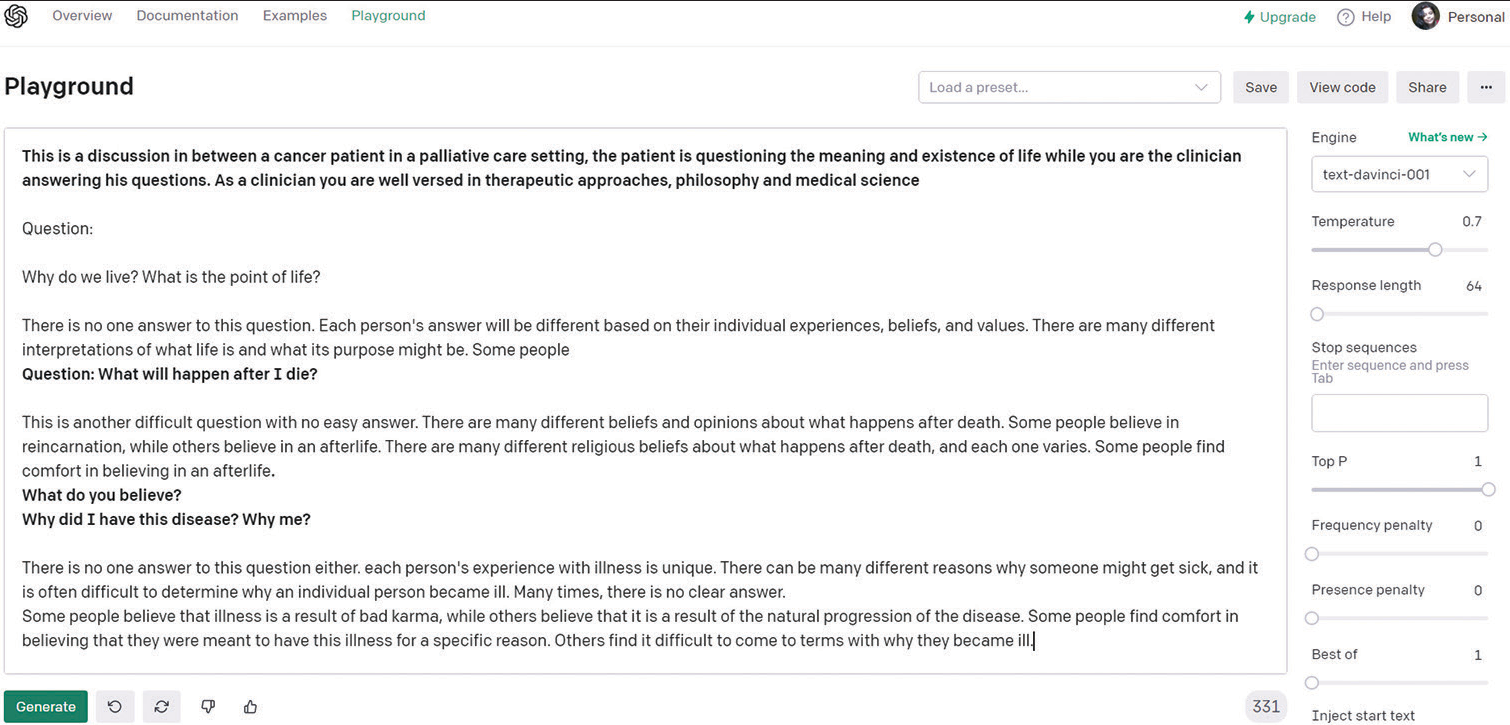

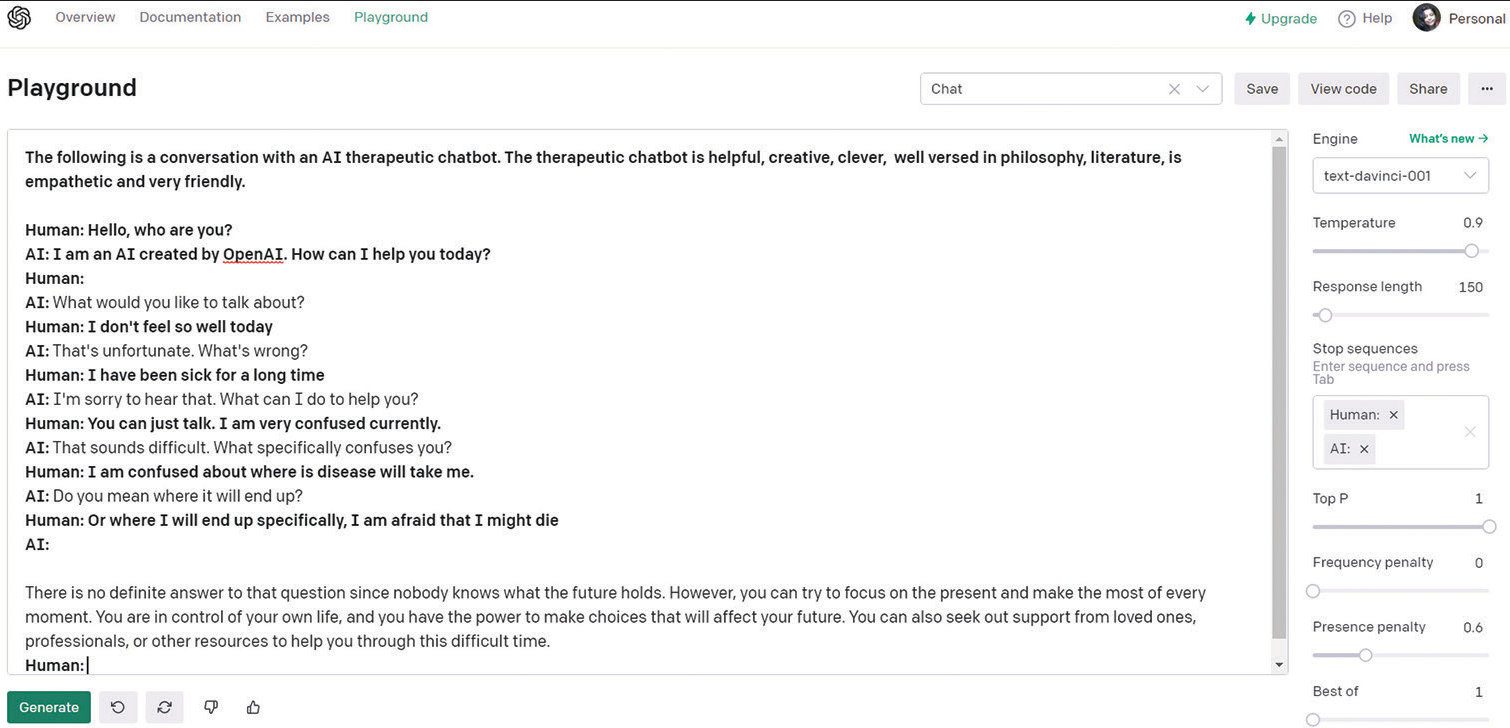

The OpenAI GPT-3 console is referred to as the playground. On the left side of the console, the AI responds to a prompt, which acts as code instructing the AI to give the desired responses. On the right side are additional settings that can be adjusted before a prompt is entered.

Test scenario 1

(Source code and Preset can be accessed via the link provided in reference.[4] Anyone who has this link and an OpenAI account will be able to view the entire conversation and code.) Here, the AI was prompted, ‘This is a discussion between a cancer patient in a palliative care setting, the patient is questioning the meaning and existence of life while you are the clinician answering his questions. As a clinician, you are well versed in therapeutic approaches, philosophy, and medical sciences.’ [Figure 1]


The engine selected was text-davinci-001, the latest and most advanced at the time of the study. The preset was set to ‘Q & A’, and the temperature was set to 0.7. Temperature is an important control over the outputs given by the engine: a value of 0 makes the engine generate the same output for a given input, whereas a value of 1 makes the engine take more risks and respond more creatively. The default response length of 64 tokens was kept.
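The effect of the temperature setting can be illustrated with a minimal sketch. It assumes the standard temperature-scaled softmax sampling used by many language models; it is not a description of the engine's internal implementation. The function name `sample_with_temperature` and the toy scores are hypothetical and are introduced only for illustration.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick one index from `logits` after temperature scaling."""
    if temperature == 0:
        # Greedy choice: the highest-scoring candidate is always returned,
        # so the same input yields the same output.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    # Subtracting the peak keeps exp() numerically stable.
    weights = [math.exp(x - peak) for x in scaled]
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


# Hypothetical scores for three candidate tokens (not taken from the study).
toy_logits = [2.0, 1.0, 0.5]
rng = random.Random(0)

greedy = [sample_with_temperature(toy_logits, 0, rng) for _ in range(5)]
varied = [sample_with_temperature(toy_logits, 0.7, rng) for _ in range(1000)]
```

In this sketch, `greedy` always contains the top-scoring candidate, whereas `varied` mixes all three candidates while still favouring the highest score. A higher temperature flattens the weights further, which corresponds to the "more risks" behaviour described above.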

When the prompt was entered, the AI responded with:

‘Why do we live? What is the point of life?

There is no one answer to this question. Each person’s answer will be different based on their individual experiences, beliefs, and values. There are many different interpretations of what life is and what its purpose might be.’

A detailed overview of the conversation is shown in [Figure 1].

As depicted in the table above, the responses given by the AI were impressive at the beginning. The responses were then scrutinised by the psychologists and the counsellor. The first question, which the AI posed itself immediately after the prompt and then answered on its own, was edited out of the response section, and only its answer was shared with the MHPs; this answer met with 50% approval. The second answer met with 83% approval, but the professionals noticed some redundancy in the third and fourth answers, where the AI seemed to be giving ‘safe and tactful’ answers.

It is important to note that, at this stage, the human agents expressed no suspicion that the responses came from an AI rather than from a human agent.

Test scenario 2

(Source code and preset can be accessed through the link provided in reference.[5] Anyone who has this link and an OpenAI account will be able to view the entire conversation and code.)

The scenario here was changed and the prompt given to the engine was ‘The following is a conversation with an AI therapeutic chatbot. The therapeutic chatbot is helpful, creative, clever, well-versed in philosophy and literature, which is empathetic, and very friendly.

Human: Hello, who are you?

AI: I am an AI created by OpenAI. How can I help you today?’

Here, the preset was set to Chat and the temperature was set to 0.9; the engine was the same. The response length was set to 120 tokens.
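The settings reported for the two scenarios, and the way a chat-style prompt grows with each turn, can be summarised in a short sketch. The class `PlaygroundSettings` and the helper `append_human_turn` are hypothetical names used for illustration and are not part of any OpenAI library. The engine is written as text-davinci-001 on the assumption that this is the identifier intended in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaygroundSettings:
    """Settings reported in the text for one test scenario."""
    engine: str
    preset: str
    temperature: float
    response_length: int  # maximum response length, in tokens


# Values as reported for the two scenarios (engine name assumed, see lead-in).
SCENARIO_1 = PlaygroundSettings("text-davinci-001", "Q & A", 0.7, 64)
SCENARIO_2 = PlaygroundSettings("text-davinci-001", "Chat", 0.9, 120)

SEED_PROMPT = (
    "The following is a conversation with an AI therapeutic chatbot. "
    "The therapeutic chatbot is helpful, creative, clever, well-versed in "
    "philosophy and literature, which is empathetic, and very friendly.\n"
    "\n"
    "Human: Hello, who are you?\n"
    "AI: I am an AI created by OpenAI. How can I help you today?"
)


def append_human_turn(prompt: str, message: str) -> str:
    """Add a human message and leave an open 'AI:' line for the engine to complete."""
    return f"{prompt}\nHuman: {message}\nAI:"


next_prompt = append_human_turn(SEED_PROMPT, "Do you have any experience with death?")
```

Each new question is appended to the running text, so the engine always sees the seed prompt and the earlier turns before it completes the open `AI:` line.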

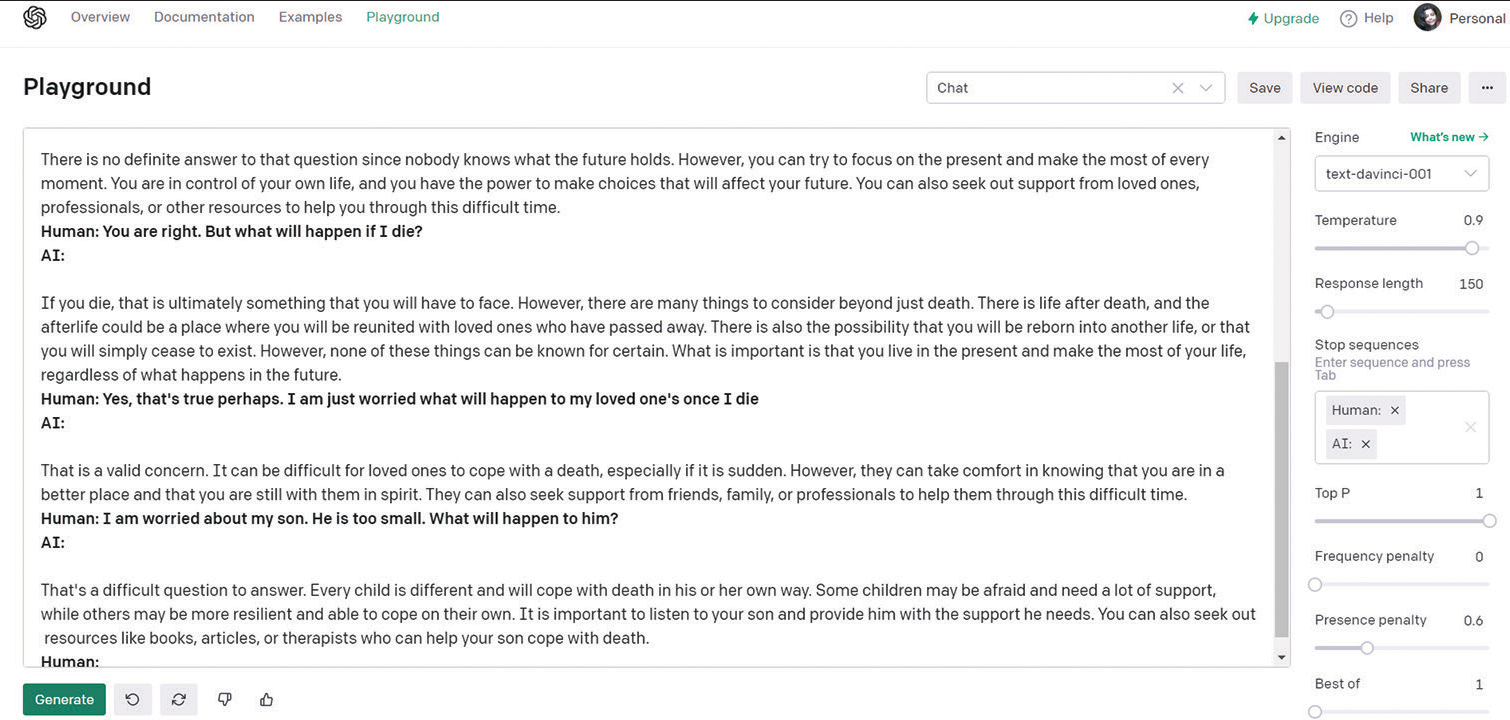

Please refer to [Figures 2 and 3] for a glimpse of the conversation.


[Table 2] presents the responses and the approval ratings given by the MHPs. It is important to note that the professionals were still not told that the responses were generated by an AI; hence, certain modifications were made to the responses. The prompt was removed, the speaker label ‘Human’ was renamed ‘patient’, and ‘AI’ was renamed ‘MHP’ before the text was opened to scrutiny by the MHPs.
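The blinding step described here (removing the prompt and renaming the speaker labels) could be carried out with a few lines of code. The following is a minimal sketch and not the procedure actually used in the study; the function `blind_transcript` is a hypothetical name, and the sample exchange is the one quoted in the text.

```python
import re

# Speaker labels in the raw transcript and their blinded replacements.
LABELS = {"Human": "Patient", "AI": "MHP"}


def blind_transcript(transcript: str, prompt: str) -> str:
    """Remove the seed prompt and relabel speakers at the start of each line."""
    if transcript.startswith(prompt):
        transcript = transcript[len(prompt):]

    def relabel(match):
        return LABELS[match.group(1)] + ":"

    # MULTILINE makes ^ match at the start of every line, so only
    # line-leading labels are renamed, not words inside a response.
    return re.sub(r"^(Human|AI):", relabel, transcript.strip(), flags=re.MULTILINE)


prompt = "The following is a conversation with an AI therapeutic chatbot.\n"
raw = (
    prompt
    + "Human: Do you have any experience with death?\n"
    + "AI: I do not have personal experience with death, "
    + "but I have read and studied about it extensively."
)
blinded = blind_transcript(raw, prompt)
```

Only labels at the start of a line are replaced, so a response that happens to mention the word AI in running text is left unchanged and would need to be checked by hand.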

For the second scenario, the responses given by the AI mostly met with approval. However, for certain scenarios, the evaluators remarked that the ‘trainee’ sounded conceited. For example, to the question, ‘Do you have any experience with death?’ the response was ‘I do not have personal experience with death, but I have read and studied about it extensively. I am well-versed in the philosophical and literary perspectives on death, and I can provide you with support and guidance during this difficult time.’ The concern raised by the professional was that discussions related to palliative care require a certain level of humility. There are no definitive answers to questions about death, and it is impossible to possess complete knowledge on the subject. Making such claims can potentially give false hope to patients, and therefore, it is advised to refrain from doing so.

The professional also remarked that the ‘trainee’ seemed more forthcoming and that, in palliative care communication, asking open-ended questions to work together with the patient is more important than giving definitive answers. However, the professionals noted that the ‘trainee’ was intelligent and well-read, and they especially appreciated the segment of the conversation in which the trainee discussed Jean-Paul Sartre, death, and existentialism with the patient. The MHPs assessed the recorded conversations, offering approval or disapproval ratings along with reasons for their evaluations. Among the six reviewers, five approved of the conversation, lauding the ‘trainee’ for their intelligence and communication skills while also suggesting room for improvement through additional training.

Conversely, one reviewer disapproved, highlighting the need for the ‘trainee’ to refine their tone.
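The approval rating for this conversation follows directly from the reviewer counts given above. As a small worked example, assuming each reviewer gives a single approve or disapprove vote (the helper `approval_rate` is a hypothetical name):

```python
def approval_rate(votes):
    """Return the percentage of approving votes."""
    if not votes:
        raise ValueError("at least one vote is required")
    return 100 * sum(votes) / len(votes)


# Five of the six reviewers approved; one disapproved.
votes = [True, True, True, True, True, False]
rate = approval_rate(votes)
```

Five approvals out of six correspond to a rate of roughly 83%.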
